Is Agentic AI Here to Stay?

Agentic AI is everywhere, but behind the hype lies a question that matters not just to engineers but to executives, directors, and curious readers: will these AI agents become permanent workhorses, or fade away like yesterday’s fads?

What is Agentic AI — and why people are excited

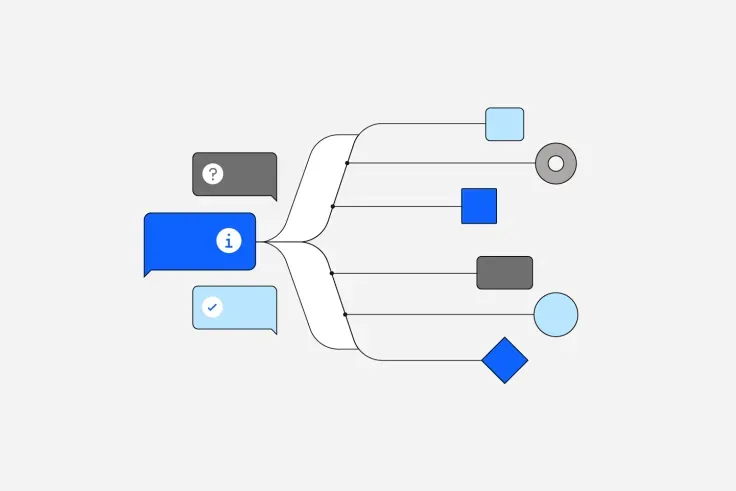

Let’s start simple. Traditional AI (like many "chatbots" or recommendation engines) often waits for you to ask a question, then responds. Agentic AI goes further: you give it a goal, and it acts to achieve it, making decisions, chaining steps, and adapting as it goes.

Imagine giving an assistant the task: "Organize next month’s travel, budget, and meeting prep" and then letting it plan flights, book hotels, draft agendas, and surface conflicts. That’s the promise of Agentic AI.
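To make "give it a goal and let it chain steps" concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular agent framework works: `run_agent`, the step names, and the retry-then-escalate policy are all hypothetical, and a real agent would plan its steps with a model rather than receive them as a fixed list.

```python
from typing import Callable

# Hypothetical step type: a label plus a function that returns True on success.
Step = tuple[str, Callable[[], bool]]


def run_agent(goal: str, steps: list[Step], max_retries: int = 1) -> list[str]:
    """Work through the steps for a goal, retrying a failed step before escalating."""
    log = [f"goal: {goal}"]
    for name, action in steps:
        for attempt in range(1, max_retries + 2):
            if action():
                log.append(f"done: {name}")
                break
            log.append(f"failed attempt {attempt}: {name}")
        else:
            # Every attempt failed, so hand the step to a person instead of guessing.
            log.append(f"escalate to human: {name}")
    return log


# Illustrative usage: the hotel booking fails once, then succeeds on retry.
calls = {"hotel": 0}


def book_hotel() -> bool:
    calls["hotel"] += 1
    return calls["hotel"] > 1


travel_log = run_agent(
    "Organize next month's travel",
    [("plan flights", lambda: True), ("book hotel", book_hotel)],
)
```

The point of the sketch is the shape: one goal, several chained steps, and a defined path for when a step does not go as planned.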

The benefits are seductive:

- It can automate multi-step tasks, not just single replies.

- It adapts or recovers when things don’t go exactly as planned.

- It frees humans to do higher-value, judgement-intensive work.

So yes, there is lots of interest, and lots of pressure to believe.

The cracks beneath the shine

But is it robust enough to last? I see three major challenges that will separate the hopeful from the realistic.

1. Risk, security, and "shadow agents"

Giving AI autonomy is like handing a junior employee the keys to the kingdom. Without guardrails, things can go sideways.

- Agentic systems often need deep access to data, which raises threats around privacy, data leakage, and "objective drift" (when an agent’s goals drift away from what its operators intended).

- Then there’s the hidden risk of "shadow agents" - unauthorized bots operating in the background, unnoticed by governance systems.

- Gartner predicts that over 40% of agentic AI projects will be canceled by the end of 2027, citing escalating costs, unclear business value, or inadequate risk controls.

In short: autonomy without accountability is dangerous.

2. Overpromise, underdeliver

We’ve seen this story before in tech. The hype can outpace reality.

- Many current "agentic" systems are really glorified task managers, not true autonomous agents. Gartner calls it "agent washing".

- Scaling from proofs-of-concept to robust, fault-tolerant systems is notoriously hard. You need orchestration, fallback plans, and monitoring.

- Model and infrastructure costs, plus ongoing maintenance and oversight, can outweigh the gains until you reach scale.

So while enthusiasm is high, execution is still an uphill battle.

3. Human trust & leadership will decide

Even a perfect agent won’t succeed if people won’t trust it or let it work.

- Who’s legally liable when an agent makes the wrong decision? The user, the designer, or the company?

- Leaders must balance autonomy with oversight. In practice, many agentic systems will require a human in the loop (i.e., supervision), and designing that balance is tricky.

- Organizational culture, transparency, and the ability to convince stakeholders that these systems are safe and useful will be as important as the technology itself.
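One way to picture the autonomy-versus-oversight balance is an approval gate: low-risk actions run on their own, and everything else waits for a person. The sketch below is a simplified assumption, not a reference design. The `Action` type, the "low"/"high" risk labels, and the `approve` callback are all made up for illustration; in a real deployment, the hard part is deciding who assigns the risk label and who answers for the approval.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    description: str
    risk: str  # hypothetical labels: "low" or "high"


def execute_with_oversight(action: Action, approve: Callable[[Action], bool]) -> str:
    """Run low-risk actions automatically; require human approval for anything else."""
    if action.risk == "low":
        return f"auto-executed: {action.description}"
    # Anything not explicitly marked low-risk is treated as needing sign-off.
    if approve(action):
        return f"executed after approval: {action.description}"
    return f"blocked: {action.description}"


# Illustrative usage: a reviewer who rejects every request that reaches them.
def cautious_reviewer(action: Action) -> bool:
    return False


draft_result = execute_with_oversight(Action("draft meeting agenda", "low"), cautious_reviewer)
payment_result = execute_with_oversight(Action("pay supplier invoice", "high"), cautious_reviewer)
```

Defaulting unknown risk levels to "needs approval" is a deliberate choice here: it trades speed for accountability, which is the trade-off this section is about.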

Agentic AI is here to stay, but only if tempered, incremental, and governed.

Agentic AI isn’t a flash in the pan. It’s the logical next step in making AI more useful. But I don’t believe it will look like the sci-fi versions people imagine. Instead, here’s how I see it unfolding:

In the near-to-medium term (2–5 years): agentic systems will live inside constrained domains (customer support, internal workflows, scheduling) where stakes are limited and data flows are well understood.

Over time: as tooling, orchestration, model efficiency, security, and trust improve, agentic AI will get woven into more critical functions (supply chain, operations, even strategy support).

But always with human checks: we’ll maintain oversight, fallback paths, and monitoring. Organizations that build in guardrails will outlast those chasing full autonomy recklessly.

And so yes, agentic AI is here to stay, but only if we grow it like a garden rather than unleash it like a wild beast.

Thank you for reading - Arjus Dashi